Data Quality Standards

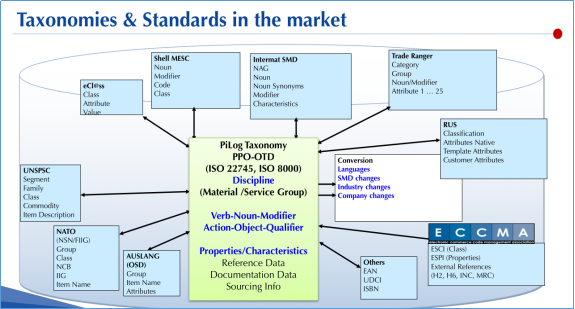

The International Organization for Standardization (ISO) has approved a set of standards for data quality as it relates to the exchange of master data between organizations and systems. These are primarily defined in ISO 8000-110, -120, -130 and -140 and ISO 22745-10, -30 and -40. Although these standards were originally inspired by the business of replacement parts cataloguing, they potentially have a much broader application. The ISO 8000 standards are high-level requirements that do not prescribe any specific syntax or semantics. The ISO 22745 standards, on the other hand, specify a particular implementation of the ISO 8000 standards in Extensible Markup Language (XML) and are aimed primarily at parts cataloguing and industrial suppliers.

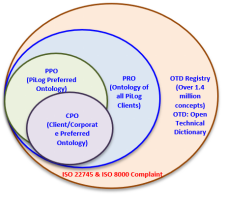

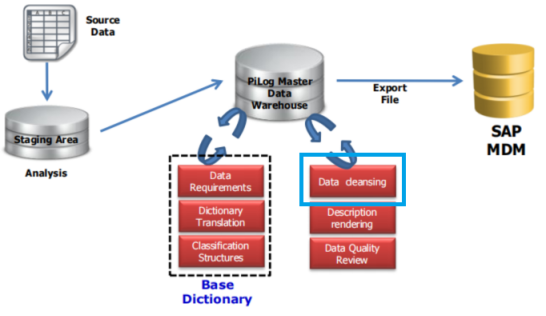

ICS Data Harmonization processes & methodologies comply with the ISO 8000 & ISO 22745 standards.

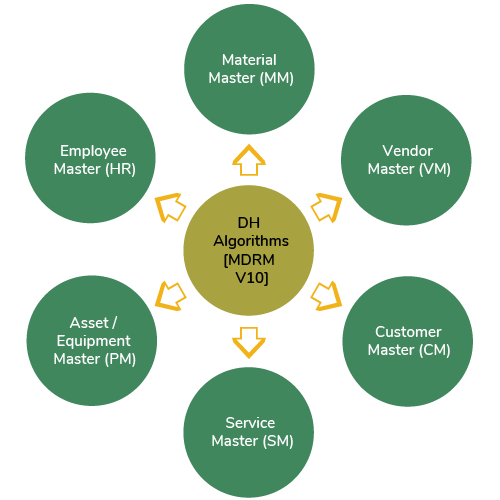

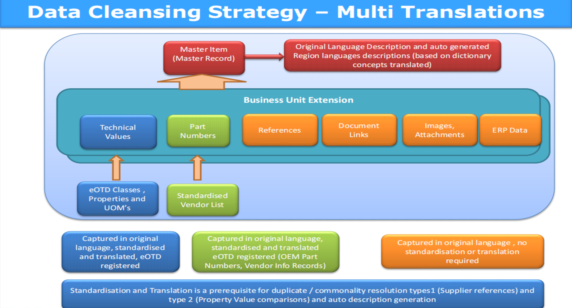

ICS utilizes the ICS Preferred Ontology (IPO) when structuring and cleansing Material, Asset/Equipment & Services Master records, ensuring that data deliverables comply with the ISO 8000 methodology, processes & standards for Syntax, Semantics, Accuracy, Provenance and Completeness.
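
To make the quality dimensions named above more concrete, the following is a minimal Python sketch of how a master record might be checked for completeness, syntax, provenance and (in a very limited sense) semantics. It is an illustration only: the `MasterRecord` and `PropertyValue` types, the `REQUIREMENTS` table and the `check_record` function are all hypothetical names invented for this example. They do not reproduce the ISO 22745 XML formats or the actual structure of the ICS Preferred Ontology. Accuracy is left out because it can only be judged against an authoritative reference source.

```python
import re
from dataclasses import dataclass, field


@dataclass
class PropertyValue:
    value: str
    source: str = ""       # provenance: who supplied the value (assumed field)
    recorded_on: str = ""  # provenance: date the value was captured (assumed field)


@dataclass
class MasterRecord:
    item_class: str
    properties: dict = field(default_factory=dict)  # property name -> PropertyValue


# Hypothetical data requirement: the properties each item class must carry,
# and the pattern each value must match. Not taken from any real dictionary.
REQUIREMENTS = {
    "BEARING, BALL": {
        "BORE DIAMETER": r"\d+(\.\d+)? mm",
        "OUTSIDE DIAMETER": r"\d+(\.\d+)? mm",
        "MANUFACTURER PART NUMBER": r"[A-Z0-9-]+",
    }
}


def check_record(record):
    """Return a list of human-readable quality issues for one record."""
    required = REQUIREMENTS.get(record.item_class)
    if required is None:
        return [f"semantics: unknown item class {record.item_class!r}"]

    issues = []
    for name, pattern in required.items():
        prop = record.properties.get(name)
        if prop is None or not prop.value:
            issues.append(f"completeness: missing {name}")
            continue
        if not re.fullmatch(pattern, prop.value):
            issues.append(f"syntax: {name} has malformed value {prop.value!r}")
        if not prop.source or not prop.recorded_on:
            issues.append(f"provenance: {name} lacks source or date")

    # Properties that the item class does not define have no agreed meaning.
    for name in record.properties:
        if name not in required:
            issues.append(f"semantics: {name} is not defined for {record.item_class}")
    return issues


if __name__ == "__main__":
    record = MasterRecord(
        "BEARING, BALL",
        {
            "BORE DIAMETER": PropertyValue("25 mm", "OEM datasheet", "2024-01-15"),
            "OUTSIDE DIAMETER": PropertyValue("52mm"),
        },
    )
    for issue in check_record(record):
        print(issue)
```

In this sketch each issue is prefixed with the dimension it relates to, so that a cleansing report could be grouped by dimension. A real implementation would draw its item classes and property definitions from a managed dictionary rather than a hard-coded table.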